Computer Graphics for Virtual and Augmented Reality

Lecture 09 – Interaction Methods for Augmented Reality in Unity

Edirlei Soares de Lima

<edirlei.lima@universidadeeuropeia.pt>

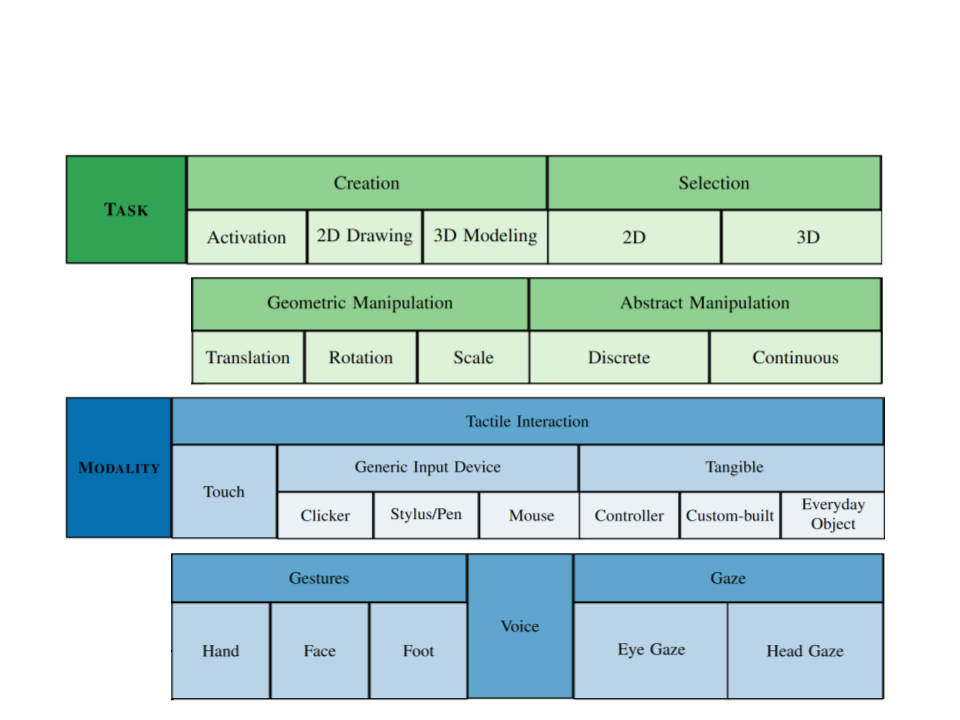

Interaction Tasks and Modalities for AR

AR Interaction Interfaces

• Browsing Interfaces:
  – Virtual 2D/3D objects are registered and displayed in real world locations.
  – Users can control the virtual viewpoint and visualize the virtual objects from different angles.
• 3D AR Interfaces:
  – Virtual 3D objects are displayed and manipulated in the real world.
  – Placement, selection, translation, rotation, scaling...

AR Interaction Interfaces

•

•

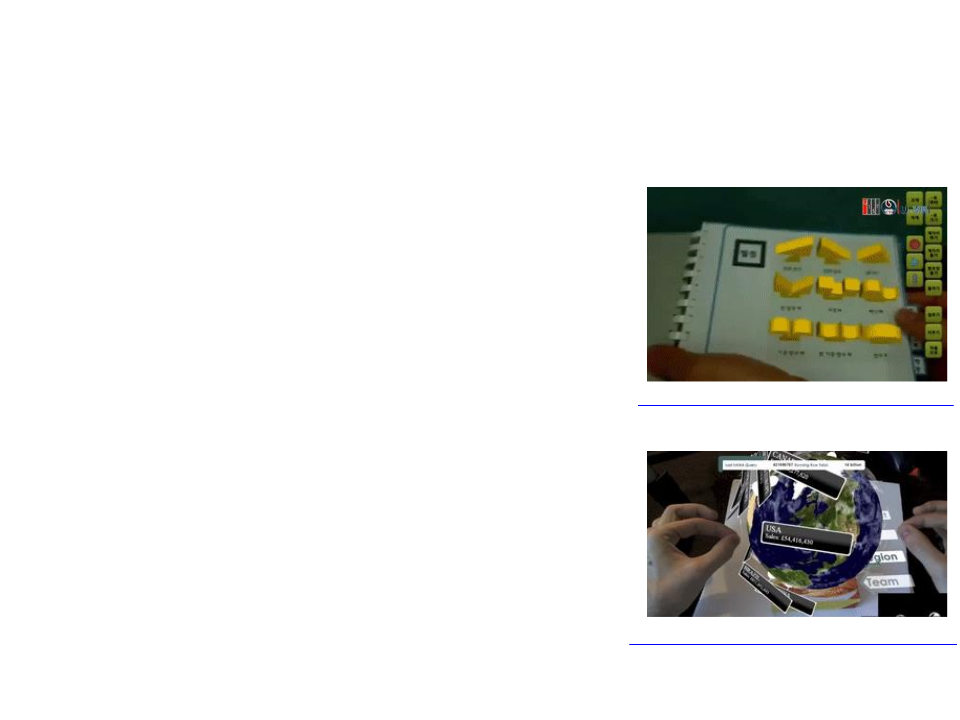

Tangible Interfaces:

–

Virtual3D objects are connected to real world

objects.

–

Users can control the virtual objects using the

physical objects.

https://www.youtube.com/watch?v=pLciqlSv0ec

Tangible AR Interfaces:

–

Physical controllers or hand tracking methods

are used to control virtual objects.

–

–

Support to multi-handed interaction.

Spatial 3D interaction methods can be

applied.

https://www.youtube.com/watch?v=8FggsGUK5iA

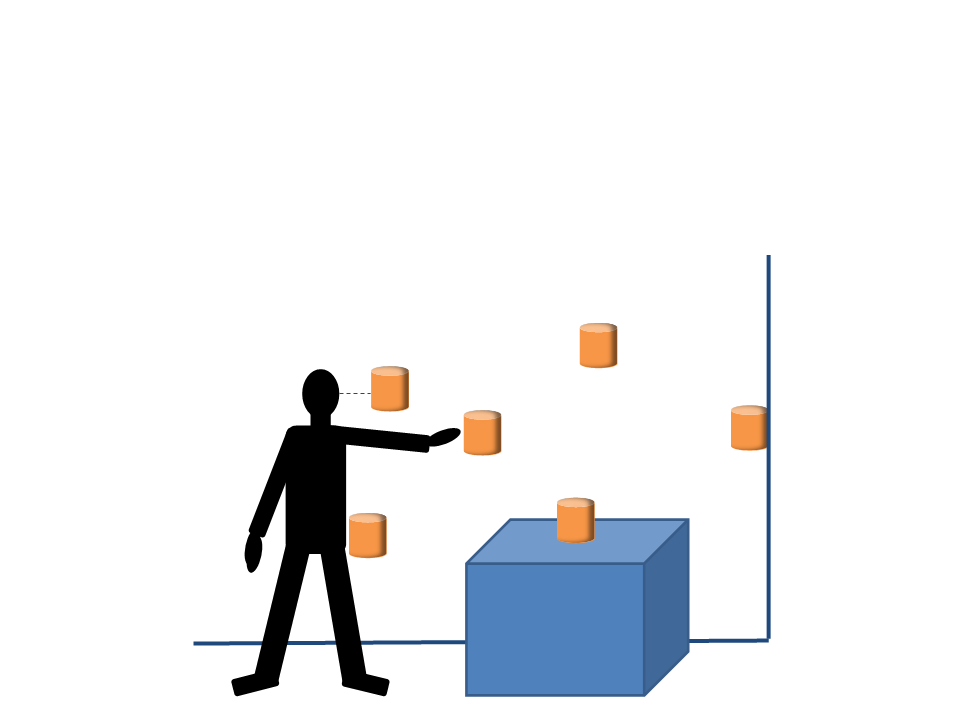

Augmentation Placement

• Augmentations (virtual objects) can be placed relative to the user’s head or body, or relative to the environment.

[Figure: placement targets: head, body, hand, free-space, wall, table]
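The difference between head-relative and environment-relative placement can be sketched in Unity as follows. This is a minimal illustration, not part of the original slides: the class name, the two prefab fields, and the offsets are assumptions. It also assumes that the camera pose is already being tracked when `Start` runs.

```csharp
using UnityEngine;

// Hypothetical helper (not from the lecture): contrasts two placement strategies.
public class AugmentationPlacement : MonoBehaviour
{
    public GameObject headLockedPrefab;   // e.g. a small status panel
    public GameObject worldLockedPrefab;  // e.g. an object standing in the room
    public Transform arCamera;            // transform of the AR camera (the user's head)

    void Start()
    {
        // Head-relative: parent the object to the camera so it follows the view.
        GameObject hud = Instantiate(headLockedPrefab, arCamera);
        hud.transform.localPosition = new Vector3(0f, -0.1f, 0.5f); // assumed offset, in metres
        hud.transform.localRotation = Quaternion.identity;

        // Environment-relative: a fixed world pose with no parent,
        // here one metre in front of the initial camera pose.
        Vector3 worldPosition = arCamera.position + arCamera.forward * 1.0f;
        Instantiate(worldLockedPrefab, worldPosition, Quaternion.identity);
    }
}
```

Body- or hand-relative placement follows the same pattern as the head-relative case, with the object parented to a tracked body or hand transform instead of the camera.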

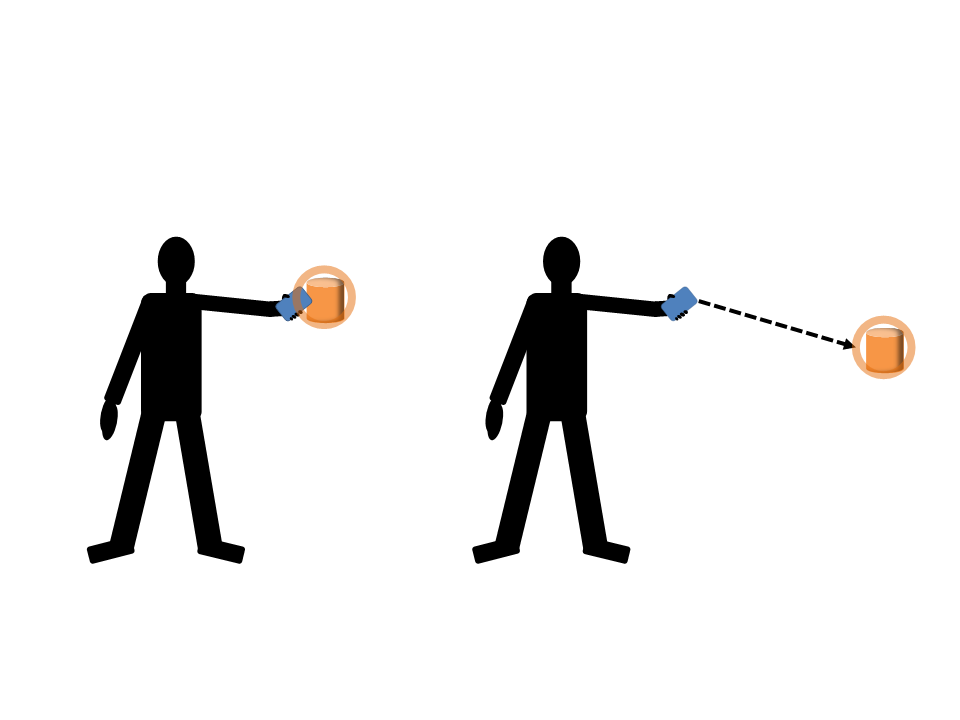

Selection by Ray-casting and Touch

[Figure panels: Selection by Touching; Selection by Ray-casting]
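Both selection styles can be sketched with standard Unity physics. The two classes below are hypothetical (names and fields are assumptions) and assume that the selectable virtual objects have colliders. In a Unity project each class would normally live in its own file.

```csharp
using UnityEngine;

// Selection by ray-casting: cast a ray from the touch position into the scene.
public class RaycastSelector : MonoBehaviour
{
    public Camera arCamera;
    public GameObject Selected { get; private set; }

    void Update()
    {
        if (Input.touchCount == 0) return;

        Touch touch = Input.GetTouch(0);
        if (touch.phase != TouchPhase.Began) return;

        // Physics.Raycast only hits objects that have a collider.
        Ray ray = arCamera.ScreenPointToRay(touch.position);
        Selected = Physics.Raycast(ray, out RaycastHit hit)
            ? hit.collider.gameObject
            : null;
    }
}

// Selection by touching: attach to a tracked object (hand, controller) that
// has a trigger collider. Trigger events also require a Rigidbody on at
// least one of the two objects involved.
public class TouchSelector : MonoBehaviour
{
    public GameObject Selected { get; private set; }

    void OnTriggerEnter(Collider other)
    {
        Selected = other.gameObject;
    }

    void OnTriggerExit(Collider other)
    {
        if (Selected == other.gameObject) Selected = null;
    }
}
```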

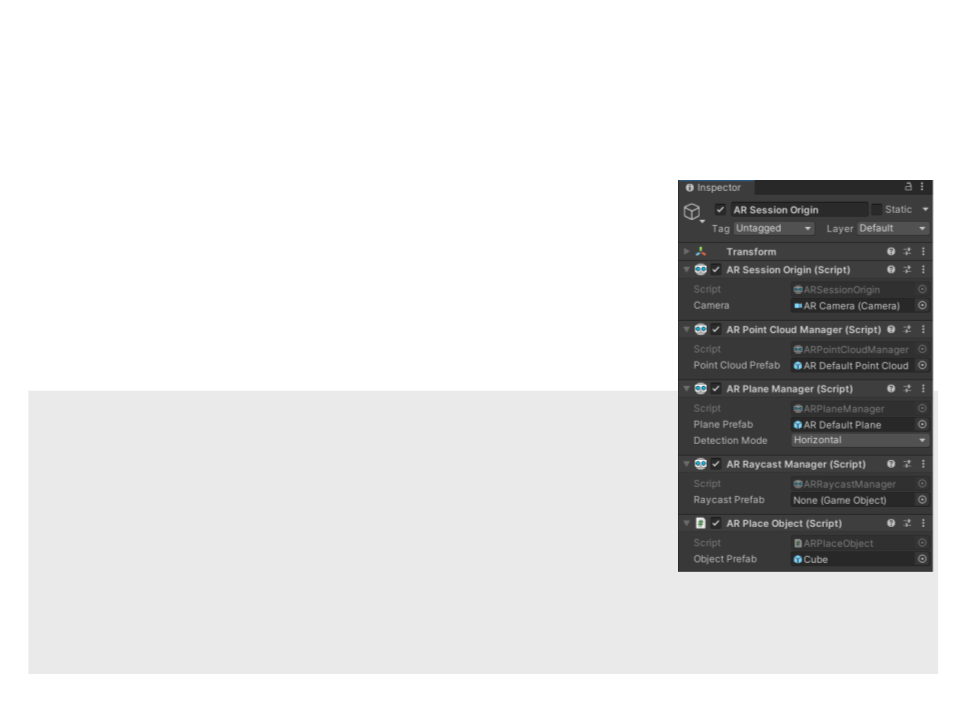

Raycast Interaction in Unity

• Scene setup:
  – Add an "AR Raycast Manager" component to the "AR Session Origin" GameObject.
• Cast a ray and instantiate a GameObject:

    using System.Collections.Generic;
    using UnityEngine;
    using UnityEngine.XR.ARFoundation;
    using UnityEngine.XR.ARSubsystems;

    // Attach to the GameObject that holds the AR Raycast Manager.
    public class ARPlaceObject : MonoBehaviour
    {
        public GameObject objectPrefab;

        private ARRaycastManager raycastManager;

        void Start()
        {
            raycastManager = GetComponent<ARRaycastManager>();
        }

        void Update()
        {
            if (Input.touchCount > 0)
            {
                Touch touch = Input.GetTouch(0);

                // React only to the frame in which the touch starts.
                if (touch.phase == TouchPhase.Began)
                {
                    List<ARRaycastHit> hits = new List<ARRaycastHit>();

                    // Cast a ray from the touch position against all trackable types.
                    if (raycastManager.Raycast(touch.position, hits,
                                               TrackableType.AllTypes))
                    {
                        // Hits are sorted by distance, so hits[0] is the closest one.
                        Pose hitPose = hits[0].pose;
                        Instantiate(objectPrefab, hitPose.position, hitPose.rotation);
                    }
                }
            }
        }
    }

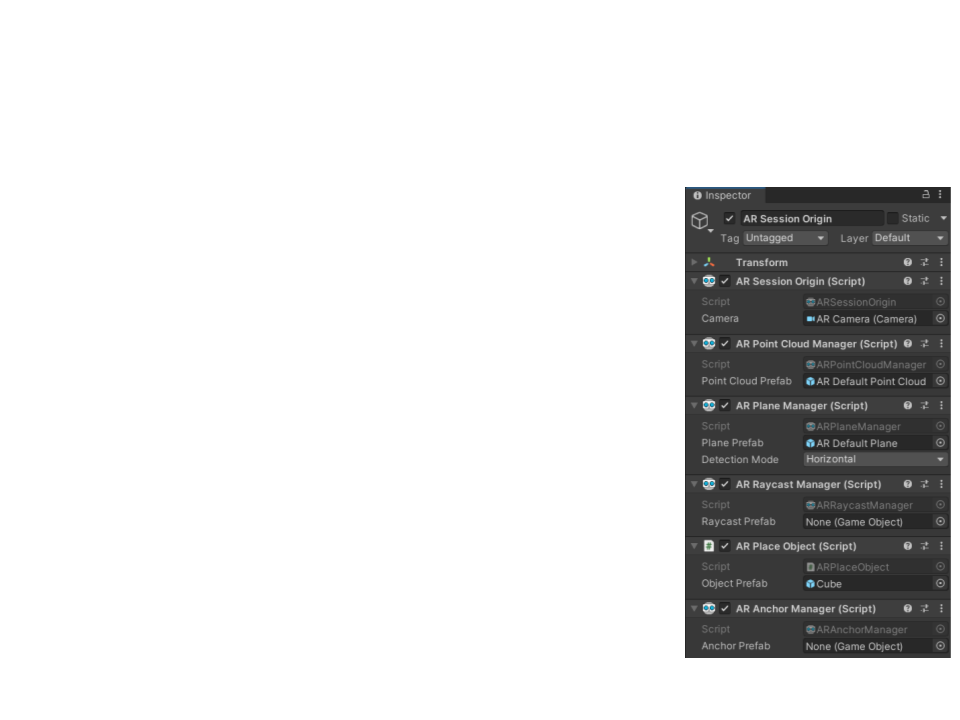

Anchors in Unity

• The environmental understanding of ARCore is updated during the AR experience, so virtual objects can appear to drift away.
  – Anchors can be used to ensure that objects stay at the same position.
  – The number of anchors impacts performance, so it’s important to limit their use and to reuse anchors when possible.
• Scene setup:
  – Add an "AR Anchor Manager" component to the "AR Session Origin" GameObject.
  – Update the ARPlaceObject script to use Anchors.

Anchors in Unity

    using System.Collections.Generic;
    using UnityEngine;
    using UnityEngine.XR.ARFoundation;
    using UnityEngine.XR.ARSubsystems;

    public class ARPlaceObject : MonoBehaviour
    {
        public GameObject objectPrefab;

        private ARRaycastManager raycastManager;
        private ARAnchorManager anchorManager;

        void Start()
        {
            raycastManager = GetComponent<ARRaycastManager>();
            anchorManager = GetComponent<ARAnchorManager>();
        }

        ARAnchor CreateAnchor(ARRaycastHit hit)
        {
            ARAnchor anchor;

            // If the ray hit a plane, attach the anchor to that plane.
            if (hit.trackable is ARPlane plane)
            {
                ARPlaneManager planeManager = GetComponent<ARPlaneManager>();
                if (planeManager)
                {
                    // Temporarily swap the anchor prefab so that the anchor
                    // is instantiated together with the object to be placed.
                    GameObject oldPrefab = anchorManager.anchorPrefab;
                    anchorManager.anchorPrefab = objectPrefab;
                    anchor = anchorManager.AttachAnchor(plane, hit.pose);
                    anchorManager.anchorPrefab = oldPrefab;
                    return anchor;
                }
            }

            // Otherwise (no plane hit, or no plane manager available),
            // instantiate the object at the hit pose and make sure it
            // has an ARAnchor component.
            GameObject instantiatedObject = Instantiate(objectPrefab,
                hit.pose.position, hit.pose.rotation);
            anchor = instantiatedObject.GetComponent<ARAnchor>();
            if (anchor == null)
            {
                anchor = instantiatedObject.AddComponent<ARAnchor>();
            }
            return anchor;
        }

        void Update()
        {
            if (Input.touchCount > 0)
            {
                Touch touch = Input.GetTouch(0);
                if (touch.phase == TouchPhase.Began)
                {
                    List<ARRaycastHit> hits = new List<ARRaycastHit>();
                    if (raycastManager.Raycast(touch.position, hits,
                                               TrackableType.AllTypes))
                    {
                        // Anchor the object at the closest hit.
                        CreateAnchor(hits[0]);
                    }
                }
            }
        }
    }
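One possible way to follow the advice about reusing anchors is to parent several nearby objects to a single anchor instead of creating one anchor per object. The sketch below is an assumption-laden illustration, not the lecture's method: the class name, the `reuseRadius` threshold, and the `Place` method are hypothetical. It relies on an "AR Anchor Manager" being present in the scene, as described in the scene setup.

```csharp
using UnityEngine;
using UnityEngine.XR.ARFoundation;

// Hypothetical helper (not from the lecture): reuses one anchor for nearby objects.
public class SharedAnchorPlacer : MonoBehaviour
{
    public GameObject objectPrefab;
    public float reuseRadius = 0.5f; // metres; assumed threshold

    private ARAnchor sharedAnchor;

    public GameObject Place(Pose pose)
    {
        bool canReuse = sharedAnchor != null &&
            Vector3.Distance(sharedAnchor.transform.position, pose.position) <= reuseRadius;

        if (!canReuse)
        {
            // Create a new anchor GameObject at the requested pose.
            GameObject anchorObject = new GameObject("SharedAnchor");
            anchorObject.transform.SetPositionAndRotation(pose.position, pose.rotation);
            sharedAnchor = anchorObject.AddComponent<ARAnchor>();
        }

        // Parent the new object to the anchor so that it follows anchor updates.
        return Instantiate(objectPrefab, pose.position, pose.rotation,
                           sharedAnchor.transform);
    }
}
```

Calling `Place(hits[0].pose)` from the touch handler would then create a new anchor only when the touch lands farther than `reuseRadius` from the current one. Objects far from their anchor may still show some drift, so the radius is a trade-off.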

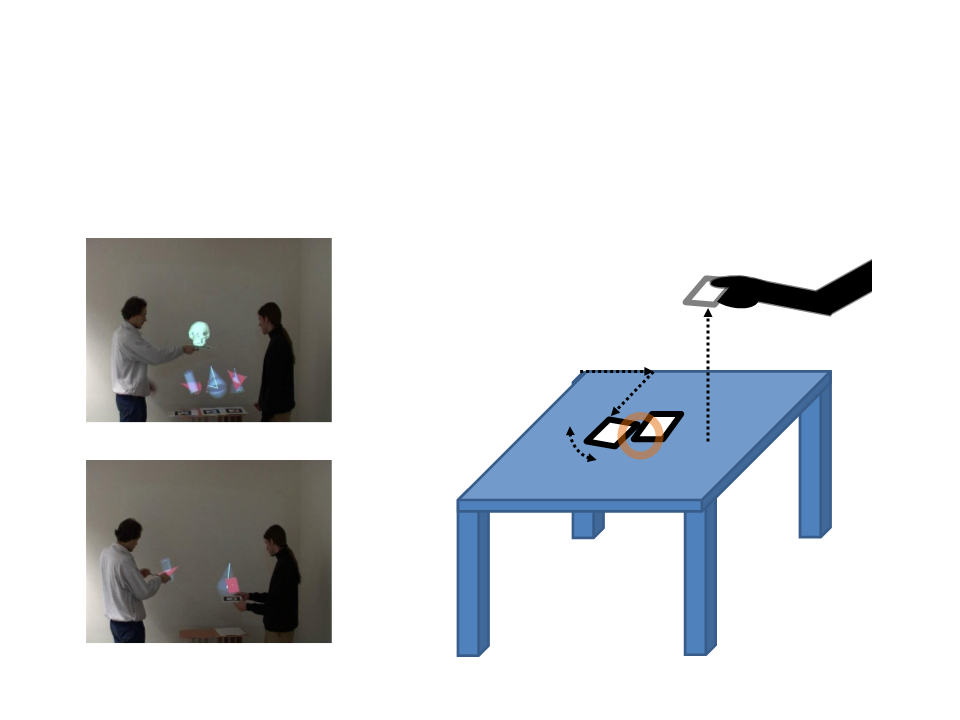

Tangible Operations

• Markers can be used to manipulate virtual objects:

[Figure: marker operations: x-distance, y-distance, height above table, rotation, proximity]
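The quantities named in the figure can be derived from tracked marker transforms. The following is a rough sketch under stated assumptions: `marker`, `otherMarker`, and `table` are transforms updated by some tracking system (for example image tracking), the table transform has unit scale, and its local Y axis points up. The class, the property names, and the mapping of the table's local X/Z axes to "x-distance"/"y-distance" are all hypothetical.

```csharp
using UnityEngine;

// Hypothetical component (not from the lecture): derives tangible-operation
// parameters from tracked marker transforms.
public class MarkerMeasurements : MonoBehaviour
{
    public Transform marker;       // marker held by the user
    public Transform otherMarker;  // second marker, used for proximity
    public Transform table;        // reference frame of the table surface
    public float proximityThreshold = 0.1f; // metres; assumed value

    public float XDistance { get; private set; }
    public float YDistance { get; private set; }
    public float HeightAboveTable { get; private set; }
    public float RotationDegrees { get; private set; }
    public bool IsNear { get; private set; }

    void Update()
    {
        // Marker position expressed in the table's local coordinate frame.
        Vector3 local = table.InverseTransformPoint(marker.position);
        XDistance = local.x;
        YDistance = local.z;        // table plane is assumed to be local XZ
        HeightAboveTable = local.y;

        // Rotation of the marker around the table normal, in degrees.
        Vector3 forwardOnTable = Vector3.ProjectOnPlane(marker.forward, table.up);
        RotationDegrees = Vector3.SignedAngle(table.forward, forwardOnTable, table.up);

        // Proximity between the two markers.
        IsNear = Vector3.Distance(marker.position, otherMarker.position)
                 < proximityThreshold;
    }
}
```

Each value can then drive a property of a virtual object, for instance mapping `RotationDegrees` to a dial or `HeightAboveTable` to a scale factor.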

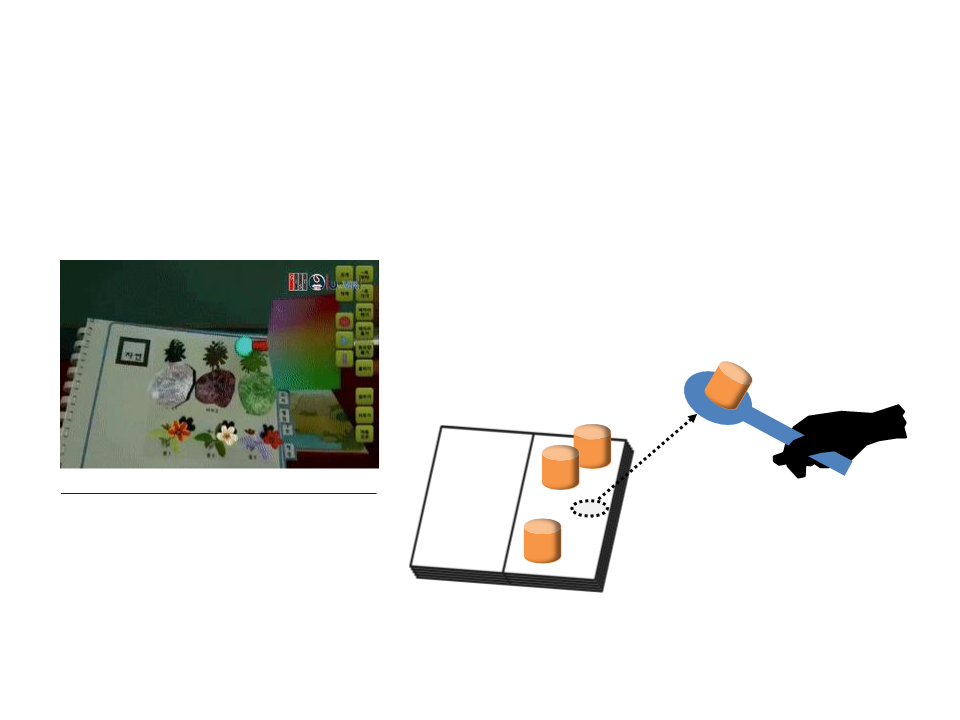

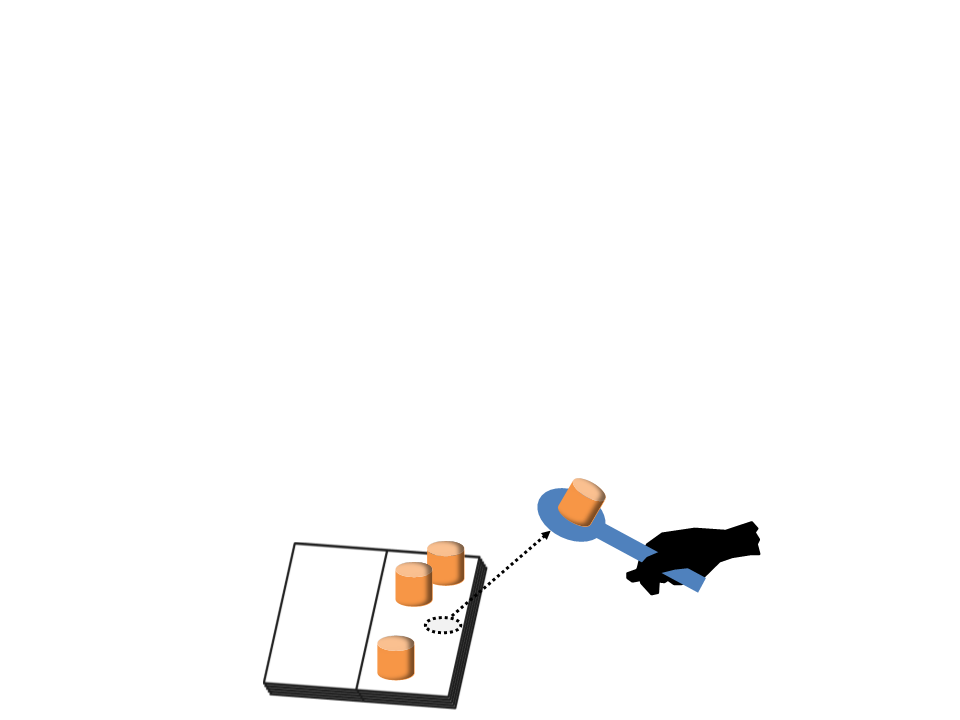

Tangible Operations

• A paddle with markers can be used to select and pick objects:
  https://www.youtube.com/watch?v=pLciqlSv0ec

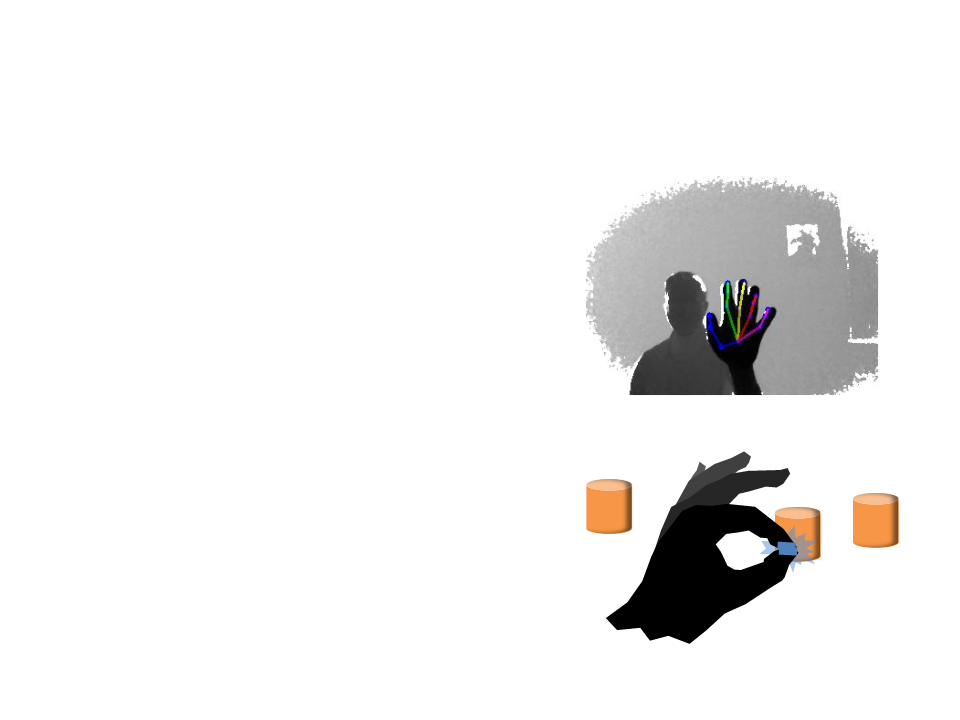

Hand Tracking

• Computer vision and machine learning algorithms can be used to identify and track the user's hand/skeleton.
• Pinch Gloves can be used to detect when the user presses fingertips together and interpret this gesture as a selection.

[Figure label: "select!"]
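When fingertip positions come from vision-based hand tracking rather than from pinch gloves (which sense physical contact), a pinch can be approximated by thresholding the distance between the thumb and index fingertips. This is a minimal sketch: the class name, the two fingertip transforms, and both thresholds are assumptions, and the transforms are expected to be updated by whichever hand-tracking system is in use.

```csharp
using UnityEngine;

// Hypothetical pinch detector (not from the lecture).
public class PinchDetector : MonoBehaviour
{
    public Transform thumbTip;
    public Transform indexTip;
    public float pinchDistance = 0.02f;   // metres; pinch starts below this
    public float releaseDistance = 0.04f; // metres; pinch ends above this

    public bool IsPinching { get; private set; }

    void Update()
    {
        float distance = Vector3.Distance(thumbTip.position, indexTip.position);

        // Two thresholds (hysteresis) avoid flickering when the measured
        // distance hovers around a single cut-off value.
        if (!IsPinching && distance < pinchDistance)
        {
            IsPinching = true;
            Debug.Log("select!");
        }
        else if (IsPinching && distance > releaseDistance)
        {
            IsPinching = false;
        }
    }
}
```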

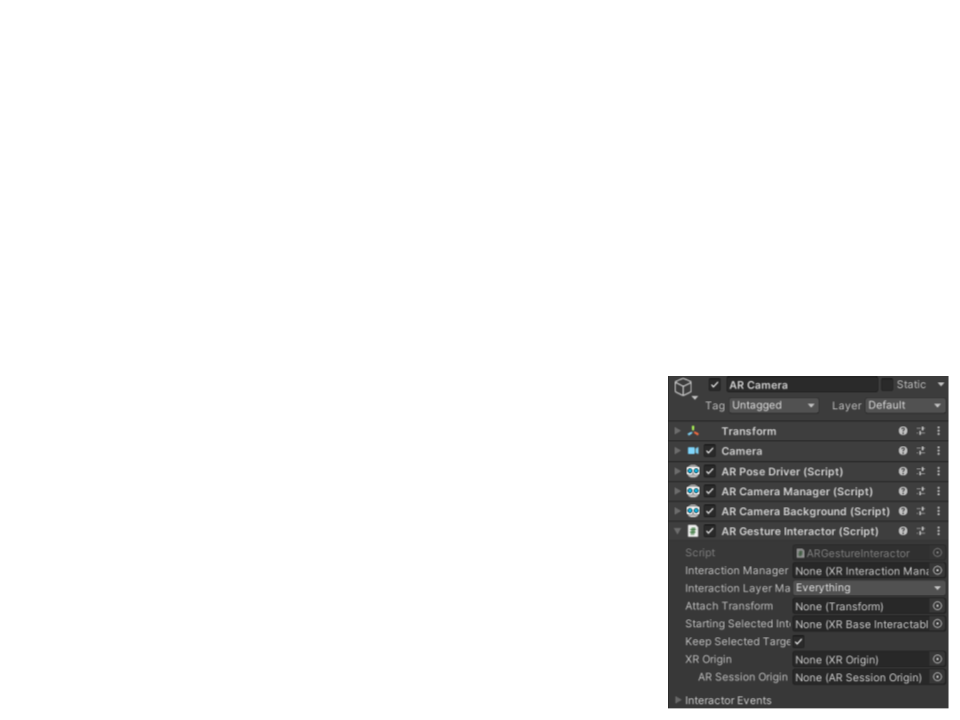

XR Interaction Toolkit for AR in Unity

• Functionalities provided by the XR Interaction Toolkit for AR:
  – Gesture system to map screen touches to gesture events.
  – Translate gestures (place, select, translate, rotate, and scale) into object manipulation.
• Setup:
  1. Install the "XR Interaction Toolkit" in the Package Manager (Window -> Package Manager).
     Add the package by name: com.unity.xr.interaction.toolkit
  2. Add an "AR Gesture Interactor" component to the "AR Camera" GameObject.
  3. Add an "XR Interaction Manager" component to the "AR Session" GameObject.

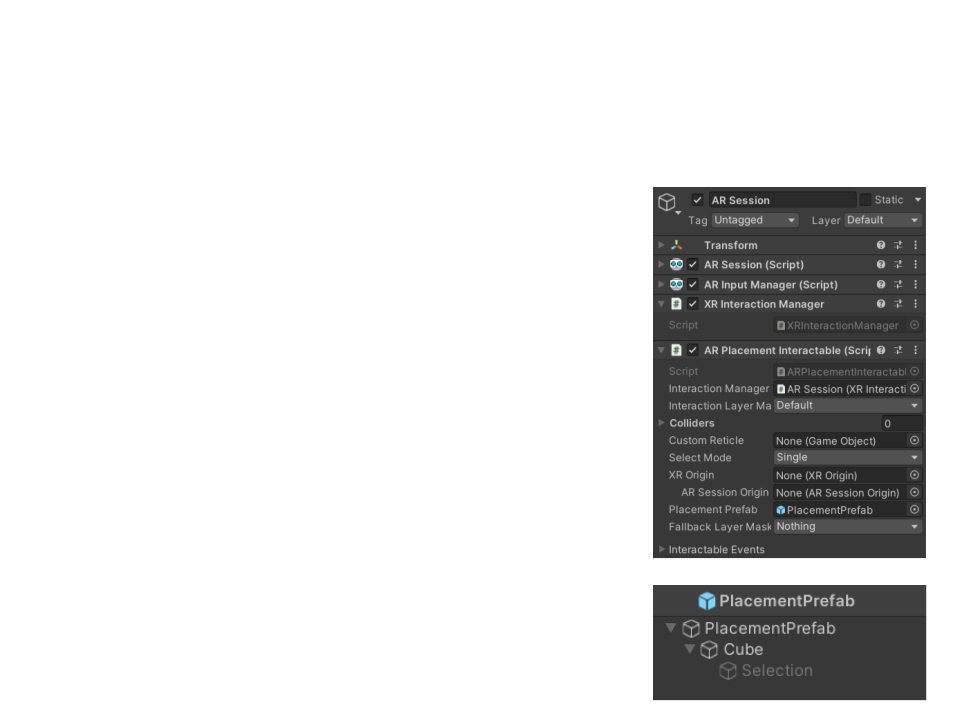

XR Interaction Toolkit for AR in Unity

• Object Placement:
  1. Add an "AR Placement Interactable" component to the "AR Session" GameObject.
  2. Create a selectable "Placement Prefab":
     a) Use an empty GameObject as the main parent.
     b) Add the 3D object as a child and modify its position until the center of its base is at the origin.
     c) Duplicate the 3D object and add it as a child of the original object.
     d) Increase the scale of the duplicated object (1.1).
     e) Create a transparent material and assign it to the duplicated object (to be used to indicate the selection).
  3. Select the "Placement Prefab" in the "AR Placement Interactable".

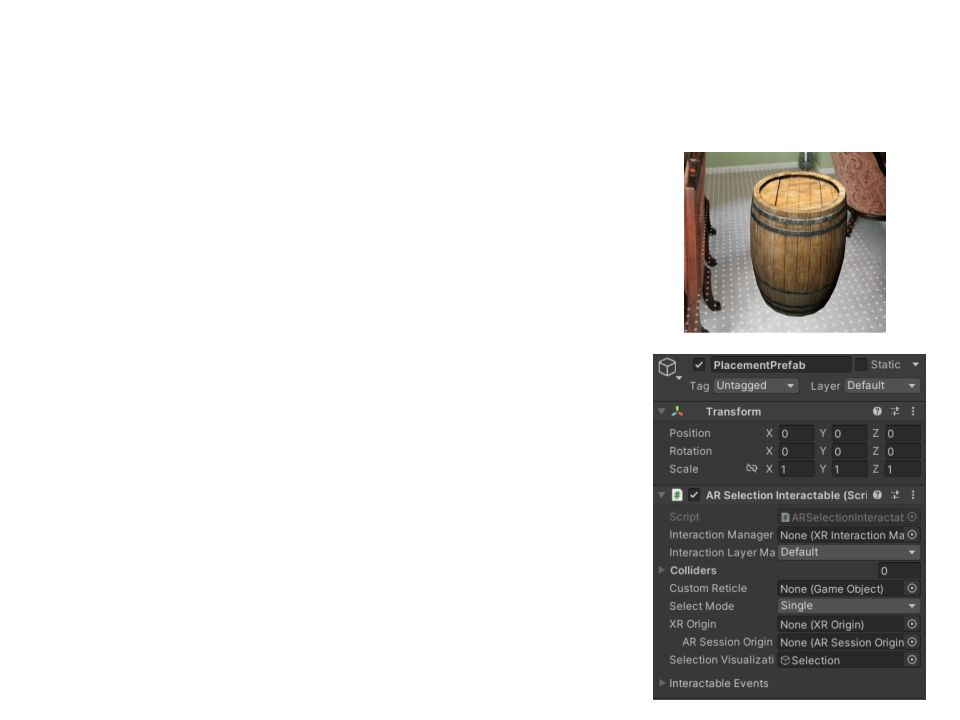

XR Interaction Toolkit for AR in Unity

• Object Selection:
  1. Add an "AR Selection Interactable" component to the "Placement Prefab".
  2. Select the GameObject to be used as "Selection Visualization" in the "AR Selection Interactable".
     • Use the transparent GameObject created in the "Placement Prefab".
  3. Objects are selected/unselected by touching them.
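To react to selection changes in a script, one option is to listen to the select events exposed by the interactable. The sketch below assumes XR Interaction Toolkit 1.x or 2.x, where the AR interactables derive from `XRBaseInteractable` in the `UnityEngine.XR.Interaction.Toolkit` namespace; other versions may organise these types differently. The `SelectionLogger` class is hypothetical and would be attached to the same GameObject as the "AR Selection Interactable".

```csharp
using UnityEngine;
using UnityEngine.XR.Interaction.Toolkit;

// Hypothetical component (not from the lecture): logs selection changes.
public class SelectionLogger : MonoBehaviour
{
    private XRBaseInteractable interactable;

    void OnEnable()
    {
        // Finds the AR Selection Interactable through its base class.
        interactable = GetComponent<XRBaseInteractable>();
        if (interactable == null) return;

        interactable.selectEntered.AddListener(OnSelected);
        interactable.selectExited.AddListener(OnDeselected);
    }

    void OnDisable()
    {
        if (interactable == null) return;

        interactable.selectEntered.RemoveListener(OnSelected);
        interactable.selectExited.RemoveListener(OnDeselected);
    }

    void OnSelected(SelectEnterEventArgs args)
    {
        Debug.Log(name + " selected");
    }

    void OnDeselected(SelectExitEventArgs args)
    {
        Debug.Log(name + " unselected");
    }
}
```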

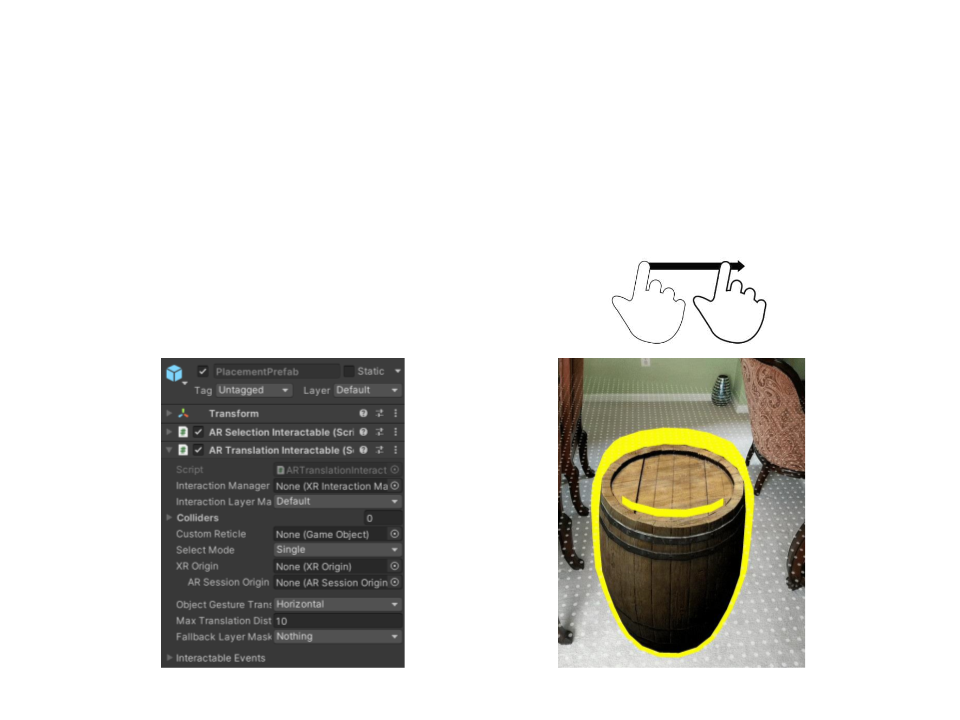

XR Interaction Toolkit for AR in Unity

• Object Translation:
  1. Add an "AR Translation Interactable" component to the "Placement Prefab".
  2. Select and drag an object to translate it.

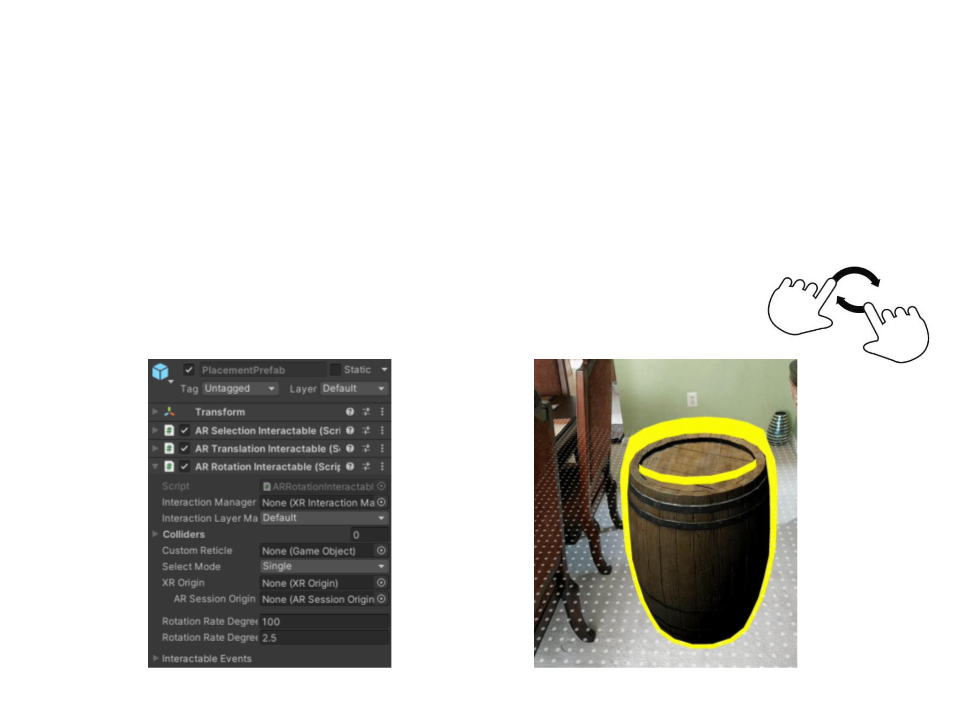

XR Interaction Toolkit for AR in Unity

• Object Rotation:
  1. Add an "AR Rotation Interactable" component to the "Placement Prefab".
  2. Select and rotate using two fingers.

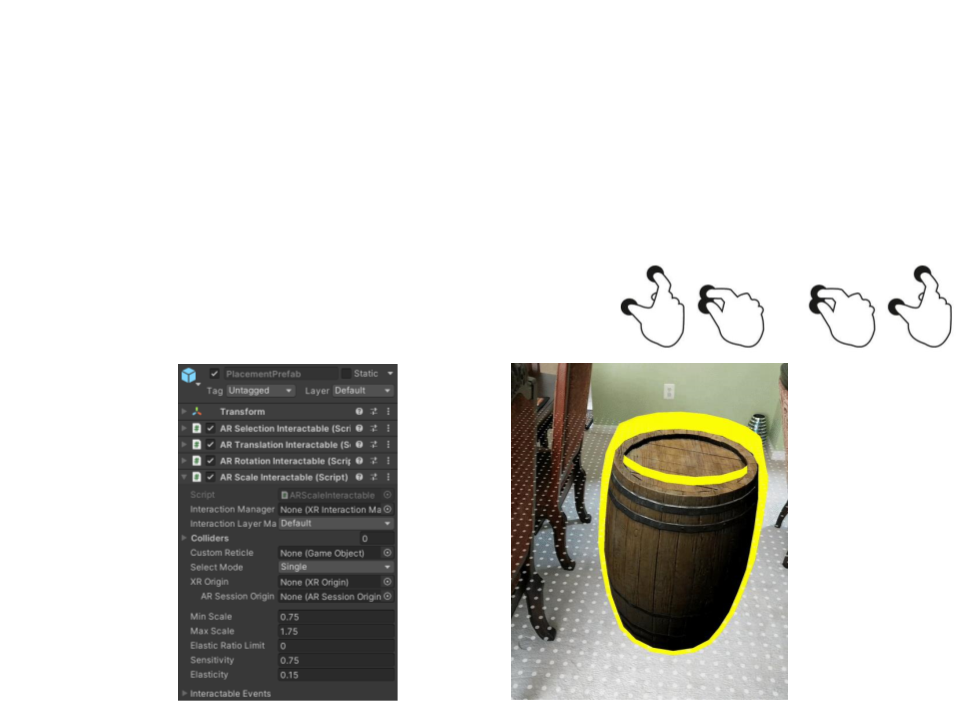

XR Interaction Toolkit for AR in Unity

• Object Scaling:
  1. Add an "AR Scale Interactable" component to the "Placement Prefab".
  2. Select and pinch/spread using two fingers.

Assignment 1

1) Implement the process of using a paddle with markers to select and pick objects.
   – The object must be selected when touched by the paddle.
   – Use two markers: one marker on one side of the paddle and another marker on the other side.
     • The first marker is used to select objects. The second marker is used to pick objects.
   – The user must first touch the object with the first marker (selecting the object), and then turn the paddle (showing the second marker) to pick and move the object.

Further Reading

• Schmalstieg, D., Höllerer, T. (2016). Augmented Reality: Principles and Practice (1st ed.). Addison-Wesley Professional.
  – Chapter 7: Situated Visualization
  – Chapter 8: Interaction
  – Chapter 9: Modeling and Annotation